This page is meant to give you a slightly brighter picture on these concepts of cluster and driver/worker nodes. However, for a detailed explanation, you might need to look out for posts in Spark Category.

Let’s begin.

What is a cluster?

Cluster in databricks is a set of computation resources on which you run notebooks and jobs.

Concept of Driver and Worker Nodes

These are the two nodes that are responsible for all the work that is done on Spark(majorly so). The driver node acts as the central control point, orchestrating tasks and coordinating execution, while worker nodes perform the actual computations and data processing.

Driver Node

- The driver node is the main process that runs the Spark application.

- It is responsible for creating the SparkContext, defining the application’s logic and breaking down the workload into smaller tasks.

- The driver node schedules tasks and coordinates their execution on the worker nodes.

Worker Node

- Worker nodes are the physical or virtual machines where the actual computations are performed.

They receive tasks from the driver node and execute them. - Each worker node can have multiple executors running on it.

- Executors are processes that run tasks and manage data storage on the worker nodes.

- Worker nodes communicate with the driver node to report progress and status.

The driver node is the brains of the operation, coordinating the work, while the worker nodes are the muscle, doing the heavy lifting of data processing.

Databricks Runtime

Databricks runtime is the set of software components that run on Databricks clusters, including Apache Spark and other libraries, providing a platform for big data analytics with improved usability, performance and security. It includes – delta lake, libraries,GPU support, Azure databricks services etc.

Whole Stage Java Code Generation

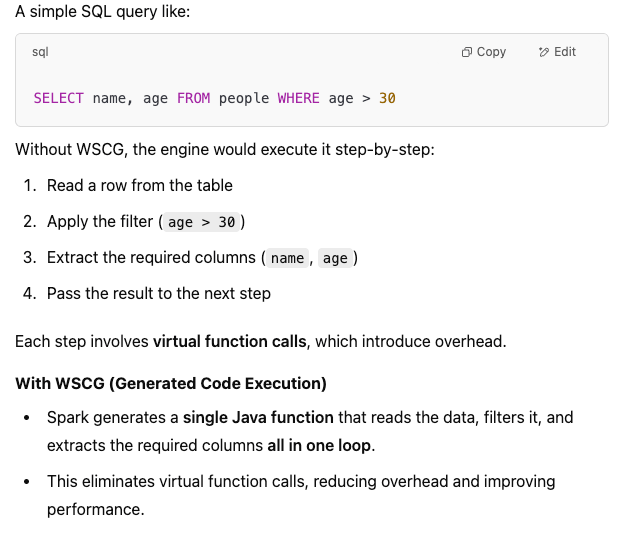

Whole-Stage Code Generation is an optimization tehnique in Spark SQL that improves query performance by reducing interpretation overhead. Instead of executing queries using an interpreter(which processes each step one by one), Spark generates optimized Java bytecode for the entire query pipeline and compiles it at runtime.

When you submit a SQL query in Spark, it is first converted into a logical plan, and then optimized into a physical plan.

Instead of executing each operation using an interpreter, Spark generates Java source code for the entire stage of execution. This code is then compiled into Java bytecode using Janion, a light-weight just-in-time compiler.

Normally, each operator would call functions separately, adding overhead. WSCG removes these function calls by merging multiple operators into a single function – hence the name Whole-Stage Code Generation.

When and When Not to Use Photon Accelerators

Photon accelerator is ideal for improving performance in Spark SQL and Dataframe based workloads on Databricks.

| Use Photon | Do not use Photon |

| if your workloads involve heavy SQL transformations, aggregations, and joins. | if your workl0ads are mostly low level RDD operations(Photon does not accelerate RDD based code) |

| if you rely on SQL queries or Dataframe transformations in databricks. | |

| if your spark jobs are slow due to CPU bound query execution, Photon helps by leveraging SIMD vectorization and native execution. It uses CPU cycles per operation and lowers query execution time. | If your bottleneck is I/O e.g. slow disk or network reads rather than CPU. |

| you are running analytical queries over terabytes or petabytes of data. It works best on OLAP style queries, where large datasets are scanned, filtered and aggregated. | If you workload is small and does not require heavy processing. |

| if you want better performance at a lower cost. | if you are already running cheap, low-priority batch jobs where speed is not a concern. |

Happy Learning 🙂